One of the more recent additions we made to the Cozmo SDK is the ability to pull color images from Cozmo’s camera feed. This opens up a world of possibilities with the SDK including color-dependent games and behaviors. In our SDK example program, color_finder.py, we have Cozmo search his environment for a user-specified color, and then pursue it.

The Color Finder program in action.

Max Cembalest, our wonderful summer intern, wrote the program and will take you through his approach in creating it.

Hi, I’m Max! I’ll show you step by step how to make Cozmo recognize and chase a tennis ball.

There are four primary steps needed to create this behavior:

- Define a threshold that maps an arbitrary RGB value (there are literally millions of them) to a simple color name, say, yellow.

- Locate “blobs” of the same color, which in this example represent an object of that color.

- Calculate the rotation angle needed for Cozmo to face the color object.

- Manage the various action states: scanning for a color object, rotating Cozmo towards that object, and then moving towards it.

1. Approximating Color

For a first pass at determining color, I wrote a method, on_new_camera_image, that ran many times per second, each time the EvtNewCameraImage event was triggered by a new image from Cozmo’s camera. It grabbed the RGB values of the pixel in the center of the image and passed them into a custom method called approximate_color:

```python
def approximate_color(self, r, g, b):
    max_color = max(r, g, b)
    # How far the strongest channel is above the weakest one
    extremeness = max(max_color - r, max_color - g, max_color - b)
    if extremeness > 50:
        if max_color == r:
            return "red"
        elif max_color == g:
            return "green"
        else:
            return "blue"
    else:
        return "white"
```

approximate_color returns the name of the largest of r, g, and b, provided that component exceeds the smallest component by more than 50; otherwise, the pixel is declared “white.” I tested the method by pointing Cozmo at blue and red images (Figure 1) and printing out the result (Figure 2).

Fig. 1 The blue and red images used to validate the approximate_color method.

Fig. 2 The live output from the approximate_color method.

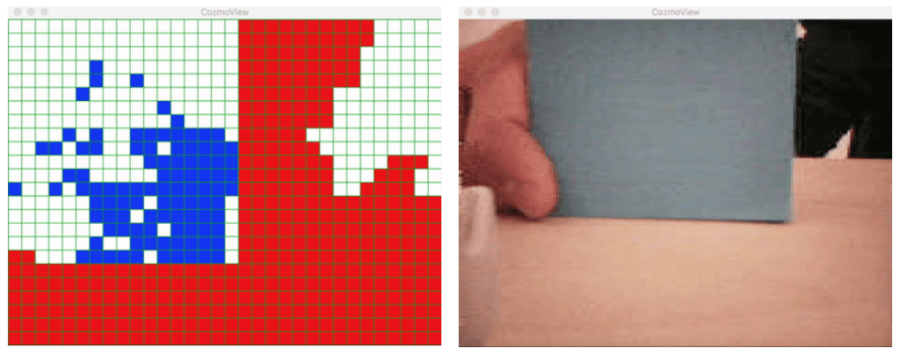

While the method worked well, it was only useful for simple images with clearly defined color boundaries. On arbitrary camera images of the real world, it consistently detected too much red. To mitigate this and make it easier for Cozmo to eventually recognize distinct color blobs, I downsized the camera image from 320×240 to 32×24 using a method from the PIL library, then applied approximate_color to each pixel of the reduced image to build a 32×24 matrix of colors (Figure 3).


Fig. 3 The 32×24 pixel matrix used to find color blobs.
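The downsizing and labeling steps can be sketched without a camera. The example program uses PIL for the resize; to keep this sketch dependency-free, it swaps in a plain box filter that averages each 10×10 block of a row-major list of (r, g, b) tuples. The names downsample and build_pixel_matrix are hypothetical, and approximate_color is restated as a free function with the same rule as above.

```python
def approximate_color(r, g, b, threshold=50):
    """Free-function version of the approximate_color rule shown above."""
    max_color = max(r, g, b)
    if max_color - min(r, g, b) > threshold:
        if max_color == r:
            return "red"
        if max_color == g:
            return "green"
        return "blue"
    return "white"


def downsample(pixels, width, height, factor=10):
    """Shrink an RGB image by averaging each factor x factor block.

    pixels is a row-major list of (r, g, b) tuples with width * height entries.
    Returns a list of rows, each a list of averaged (r, g, b) tuples.
    """
    small_w, small_h = width // factor, height // factor
    block_size = factor * factor
    rows = []
    for by in range(small_h):
        row = []
        for bx in range(small_w):
            sums = [0, 0, 0]
            for y in range(by * factor, (by + 1) * factor):
                for x in range(bx * factor, (bx + 1) * factor):
                    for c, value in enumerate(pixels[y * width + x]):
                        sums[c] += value
            row.append(tuple(s // block_size for s in sums))
        rows.append(row)
    return rows


def build_pixel_matrix(pixels, width=320, height=240, factor=10):
    """Downsample, then label every remaining pixel with a color name."""
    small = downsample(pixels, width, height, factor)
    return [[approximate_color(*p) for p in row] for row in small]


# Usage: a 320x240 frame that is blue on the left half and red on the right.
frame = [(20, 20, 200) if x < 160 else (200, 20, 20)
         for y in range(240) for x in range(320)]
matrix = build_pixel_matrix(frame)
print(len(matrix), len(matrix[0]))  # 24 32
print(matrix[0][0], matrix[0][31])  # blue red
```

Averaging before classifying means each matrix cell reflects a whole 10×10 patch, which smooths out isolated noisy pixels.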

2. Locating Color Objects

To locate color blobs in Cozmo’s view, I started with the standard connected-components algorithm, which counts how many distinct groups of connected equal-valued squares there are in a matrix.

A modification to the algorithm was needed, though, to uniquely identify each blob and keep track of which points belong to it. To achieve this, I kept a dictionary in which each key is a unique blob number and each value is the list of points in the corresponding color blob.

I made a new BlobDetector class, which takes pixel_matrix from the ColorFinder class as input. Scanning pixel_matrix row by row from the top left, the following algorithm determines which blob each point belongs to:

- If the point matches the color of the point immediately to the left, we add it to the blob of that left point.

- Likewise, if the point matches the color of the point immediately above, we add it to the blob of that above point.

- If the point matches neither, we make a new blob for that point.

- But if the point matches both the above and left points, then we merge the left point’s blob and the above point’s blob (once we check that the left point and the above point don’t already belong to the same blob).
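The four rules above can be sketched as a single pass over the matrix. This is an illustration of the described approach, not the BlobDetector source; find_blobs is a hypothetical name, and points are stored as (x, y) with x as the column and y as the row, matching the coordinate system described below.

```python
def find_blobs(matrix):
    """Group equal, 4-connected cells of a 2D list into blobs.

    Returns a dict mapping a unique blob number to the list of (x, y)
    points in that blob, where x is the column and y is the row.
    """
    blobs = {}      # blob number -> list of (x, y) points
    blob_of = {}    # (x, y) -> blob number
    next_id = 0
    for y, row in enumerate(matrix):
        for x, value in enumerate(row):
            left = (x - 1, y) if x > 0 and row[x - 1] == value else None
            above = (x, y - 1) if y > 0 and matrix[y - 1][x] == value else None
            if left is not None and above is not None:
                target, other = blob_of[left], blob_of[above]
                if target != other:
                    # Merge the above point's blob into the left point's blob.
                    for point in blobs[other]:
                        blob_of[point] = target
                    blobs[target].extend(blobs.pop(other))
            elif left is not None:
                target = blob_of[left]
            elif above is not None:
                target = blob_of[above]
            else:
                # Matches neither neighbor: start a new blob.
                target = next_id
                blobs[target] = []
                next_id += 1
            blobs[target].append((x, y))
            blob_of[(x, y)] = target
    return blobs


# Usage: the white cells form a U shape that only joins up at the last cell.
grid = [["yellow", "yellow", "white"],
        ["white",  "yellow", "white"],
        ["white",  "white",  "white"]]
print(sorted(len(points) for points in find_blobs(grid).values()))  # [3, 6]
```

The merge branch matters for shapes like this U: the two white arms start as separate blobs and only turn out to be connected at the bottom-right cell.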

For each blob the algorithm found, I averaged the x and y values of all the points in that blob to approximate where the blob’s center lies in pixel_matrix, and printed out each (x, y).
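The averaging step is small enough to show in full. As a sketch, assuming blobs are stored as a dict of (x, y) point lists like the one described above (blob_centers is a hypothetical name):

```python
def blob_centers(blobs):
    """Average the x and y of each blob's points to approximate its center."""
    centers = {}
    for blob_id, points in blobs.items():
        n = len(points)
        centers[blob_id] = (sum(x for x, _ in points) / n,
                            sum(y for _, y in points) / n)
    return centers


print(blob_centers({0: [(0, 0), (2, 0), (1, 3)]}))  # {0: (1.0, 1.0)}
```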

To make this process easier to visualize and debug, I made the ColorFinder class inherit from cozmo.annotate.Annotator. Now I could draw the contents of pixel_matrix onto the viewer window.

The coordinate system of pixel_matrix has (0, 0) as the top left point and (31, 23) as the bottom right point, so x values increase from left to right and y values increase from top to bottom. Even though the colors and shapes that Cozmo could detect were only rough approximations, the BlobDetector algorithm was working well: the (x, y) coordinates printed out while Cozmo watched correctly followed the ball as I moved it (Figure 4).

Fig. 4 Cozmo correctly tracking color.

3. Turning Towards the Blobs

Cozmo’s camera has attributes fov_x and fov_y, which are angles that describe the full width and height of Cozmo’s field of view. So any perceived horizontal or vertical distance between a blob’s center and the center of the screen can be expressed as an angle that is a fraction of fov_x or fov_y, respectively.

The exact values of fov_x and fov_y vary slightly between Cozmos because of minor manufacturing variances, but they are roughly 58 and 45 degrees, respectively. In this example image, the center of the blue blob is calculated to be 17.98 degrees to the right and 11.25 degrees up.
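The fraction-of-field-of-view idea can be sketched as a small function on the 32×24 matrix. It assumes the rough 58 and 45 degree values above as defaults (a real program would read fov_x and fov_y from the camera), uses a linear approximation rather than exact pinhole-camera trigonometry, and blob_offset_angles is a hypothetical name.

```python
def blob_offset_angles(center_x, center_y, width=32, height=24,
                       fov_x=58.0, fov_y=45.0):
    """Angle (degrees) from the image center to a blob center.

    Linear approximation: the pixel offset, as a fraction of the image
    size, times the matching field of view. Returns (degrees_right,
    degrees_up); y grows downward in the matrix, so "up" flips its sign.
    """
    mid_x = (width - 1) / 2   # 15.5 for columns 0..31
    mid_y = (height - 1) / 2  # 11.5 for rows 0..23
    degrees_right = (center_x - mid_x) / width * fov_x
    degrees_up = (mid_y - center_y) / height * fov_y
    return degrees_right, degrees_up


print(blob_offset_angles(15.5, 11.5))  # (0.0, 0.0): already centered
print(blob_offset_angles(31.5, -0.5))  # (29.0, 22.5): top-right corner
```

A blob at the very edge of the image is half a field of view away, which is why the corner gives 29 and 22.5 degrees. When feeding these into the SDK, check the sign conventions in the reference docs; as far as I know, positive turn_in_place angles turn Cozmo to the left, so a rightward offset would be negated.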

I called the following method every time I calculated the blob center. It uses the SDK methods robot.set_head_angle and robot.turn_in_place, which return Actions that activate Cozmo’s motors:

```python
def turn_toward_blob(self, amount_to_move_head, amount_to_rotate):
    # Tilt the head and rotate the body at the same time
    new_head_angle = self.robot.head_angle + amount_to_move_head
    self.robot.set_head_angle(new_head_angle, in_parallel=True)
    self.robot.turn_in_place(amount_to_rotate, in_parallel=True)
```

I set Cozmo’s color_to_find to blue, and tested out the tracking using a blue notepad. It was clear the approximate_color method wasn’t strong enough to pick up all the blue (Figure 5).

Fig. 5 Tracking a blue notepad.

I noticed Cozmo’s reaction time was lagging a bit, but soon realized that I was essentially clogging Cozmo with conflicting actions. For instance, when I thought Cozmo should be turning left, he was completing a right turn because of a robot.turn_in_place call from 2 seconds ago.

To address this, I needed to make sure that Cozmo’s movements responded only to current, accurate information from his camera. So I stored each in-progress action in a variable, which let me abort all prior actions before starting new ones.

I gave the ColorFinder class the attributes tilt_head_action and rotate_action, and added a new method abort_actions that aborts its input actions if they are active. My turn_toward_blob method now looked like this:

```python
def turn_toward_blob(self, amount_to_move_head, amount_to_rotate):
    # Cancel any head tilt or rotation still running from an older frame
    self.abort_actions(self.tilt_head_action, self.rotate_action)
    new_head_angle = self.robot.head_angle + amount_to_move_head
    # Keep handles to the new actions so the next call can abort them
    self.tilt_head_action = self.robot.set_head_angle(new_head_angle, in_parallel=True)
    self.rotate_action = self.robot.turn_in_place(amount_to_rotate, in_parallel=True)
```

This resulted in a much better response time.
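The abort_actions method itself isn’t shown, so here is a minimal sketch of what it could look like, written as a free function. It assumes Cozmo SDK Action objects, which expose an is_running property and an abort() method, and it skips None so it also works before any action has been started. StandInAction is a hypothetical stand-in for trying the logic without a robot.

```python
def abort_actions(*actions):
    """Abort each action that is still running.

    Assumes Cozmo SDK Action objects (is_running property, abort() method).
    None entries (no action started yet) are skipped.
    """
    for action in actions:
        if action is not None and action.is_running:
            action.abort()


class StandInAction:
    """Minimal stand-in for an SDK Action, for trying this out without a robot."""

    def __init__(self, running):
        self.is_running = running
        self.aborted = False

    def abort(self):
        self.aborted = True
        self.is_running = False


tilt, rotate = StandInAction(True), StandInAction(False)
abort_actions(tilt, rotate, None)
print(tilt.aborted, rotate.aborted)  # True False
```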

A Note on Color Ranges

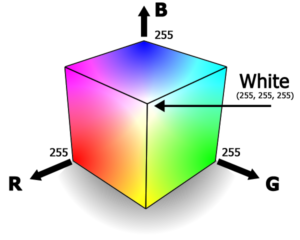

Colors in RGB space have three components, each between 0 and 255, so every color can be placed as a point inside a cube. This lets you define the “closeness” of two colors as the Euclidean distance between their points in the RGB cube.

As you can see from the picture, there are large chunks of the cube that should satisfy the high-level condition to be “red,” “green,” or “blue.” So I defined a set of color ranges in the format (min R, max R, min G, max G, min B, max B).

To approximate a color, I measure its distance to each of the color ranges and settle on the range that is closest. Each range is an axis-aligned box inside the RGB cube, so measuring the distance from a color to a color range is the same as measuring the shortest distance from a point to a box. Because we only need the smallest distance, we can compare squared distances and skip calculating the square root.

While not perfect, this reduced the amount of red noise in the background, and individual objects became a bit more separated in the approximation, enough for Cozmo to locate and chase distinct colors.
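The range-matching approach can be sketched as follows. The range values in COLOR_RANGES are hypothetical placeholders, not the ones in color_finder.py; the point is the per-axis gap, which is zero when a channel lies inside its range, so a color inside a box has distance zero.

```python
# Hypothetical ranges: (min R, max R, min G, max G, min B, max B)
COLOR_RANGES = {
    "red":    (150, 255, 0, 100, 0, 100),
    "green":  (0, 100, 150, 255, 0, 100),
    "blue":   (0, 100, 0, 100, 150, 255),
    "yellow": (150, 255, 150, 255, 0, 100),
    "white":  (200, 255, 200, 255, 200, 255),
}


def axis_gap(value, low, high):
    """Distance from value to the interval [low, high] along one axis."""
    if value < low:
        return low - value
    if value > high:
        return value - high
    return 0


def squared_distance_to_range(rgb, color_range):
    """Squared Euclidean distance from an RGB point to an axis-aligned box."""
    r, g, b = rgb
    min_r, max_r, min_g, max_g, min_b, max_b = color_range
    return (axis_gap(r, min_r, max_r) ** 2
            + axis_gap(g, min_g, max_g) ** 2
            + axis_gap(b, min_b, max_b) ** 2)


def closest_color(rgb, ranges=COLOR_RANGES):
    """Name of the range with the smallest squared distance (no sqrt needed)."""
    return min(ranges, key=lambda name: squared_distance_to_range(rgb, ranges[name]))


print(closest_color((240, 230, 40)))  # yellow
```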

4. Managing Action States

To get Cozmo to drive towards an object that matches his color_to_find, I wanted a way to move between the following three states:

- Look around: Cozmo does not see any blobs that match his color_to_find, so he runs the LookAroundBehavior.

- Found a color: Cozmo sees a blob that matches color_to_find, but is still rotating and tilting his head to get the blob to be stable in the center of his view.

- Drive forward: Cozmo sees a blob that matches color_to_find and has it stable in the center of his view, so he drives forward.

To do so, all that was needed was to add an attribute to the ColorFinder class called state, and at key points in the life cycle of the program, call the appropriate reaction based on the current value of state.

Changing between look_around_state and found_color_state can be done purely from within on_new_camera_image, since those transitions are based on what BlobDetector is picking up from the continuous camera feed.

The transitions in and out of drive_state are different, though. Once every second, I checked the total of all the angles Cozmo had turned during that second. He drives forward only if that total is below a threshold; otherwise, he remains in found_color_state.
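The state handling described above can be sketched as a small class with two entry points: one called per camera frame and one called once per second. Everything here is an assumption for illustration: the class and method names, the 10-degree threshold, summing absolute angles so left and right corrections don’t cancel, and dropping back from drive to found_color when the turning total gets large.

```python
LOOK_AROUND_STATE = "look_around"
FOUND_COLOR_STATE = "found_color"
DRIVE_STATE = "drive"


class ColorChaseStates:
    """Tracks the three states; names and threshold are hypothetical."""

    def __init__(self, angle_threshold_degrees=10.0):
        self.state = LOOK_AROUND_STATE
        self.angle_threshold = angle_threshold_degrees
        self.turned_this_second = 0.0

    def on_camera_frame(self, blob_visible, turn_degrees=0.0):
        """Camera-driven transitions between look_around and found_color."""
        if not blob_visible:
            self.state = LOOK_AROUND_STATE
        elif self.state == LOOK_AROUND_STATE:
            self.state = FOUND_COLOR_STATE
        # Absolute values, so left and right corrections don't cancel out.
        self.turned_this_second += abs(turn_degrees)

    def on_one_second_elapsed(self):
        """Timer-driven transitions in and out of drive."""
        if self.state != LOOK_AROUND_STATE:
            if self.turned_this_second < self.angle_threshold:
                self.state = DRIVE_STATE
            else:
                self.state = FOUND_COLOR_STATE
        self.turned_this_second = 0.0


states = ColorChaseStates()
states.on_camera_frame(blob_visible=True, turn_degrees=25.0)
states.on_one_second_elapsed()
print(states.state)  # found_color (still turning a lot)
states.on_camera_frame(blob_visible=True, turn_degrees=2.0)
states.on_one_second_elapsed()
print(states.state)  # drive
```

Splitting the transitions this way mirrors the text: losing the blob is detected frame by frame, while the decision to drive waits for a full second of evidence that Cozmo has stopped correcting his aim.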

Cozmo could drive now, but the program didn’t work with yellow yet, because I still needed to reduce the overall amount of red and yellow in the image; under my particular lighting conditions, these were the most common colors in the camera image.

Last Touches

Color Balancing

I found an algorithm called Gray World, which balances the color in an image by scaling each color channel so that the average color across the whole image becomes a neutral gray. Using it lowered the overall amount of red and yellow. It isn’t a perfect color-balancing algorithm, because the true average color of a scene isn’t necessarily gray (e.g., in an image of the sky the average color might be blue), but for my purpose it was more than sufficient.
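A minimal Gray World sketch on a plain list of (r, g, b) tuples looks like this. It is a standard textbook form of the algorithm, not necessarily the exact variant used in the example program: each channel gets a gain equal to the overall gray level divided by that channel’s mean, and results are clipped to 255. gray_world is a hypothetical name.

```python
def gray_world(pixels):
    """Gray World white balance for a list of (r, g, b) tuples (0-255).

    Scales each channel so its mean matches the mean of all three channel
    means, pushing the image's average color toward neutral gray.
    """
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3
    # Leave a channel alone if it is entirely zero, to avoid dividing by 0.
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return [tuple(min(255, round(p[c] * gains[c])) for c in range(3))
            for p in pixels]


print(gray_world([(200, 100, 50), (100, 50, 25)]))  # [(117, 117, 117), (58, 58, 58)]
```

In this tiny example the whole image has a strong red cast, so balancing turns both pixels neutral; in a real frame, only the overall tint is removed.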

Remembering Past Blobs

Whenever Cozmo finds a blob, he stores the coordinates of the blob’s center in a variable called last_known_blob_center. So when no blobs are visible, Cozmo turns in the direction of last_known_blob_center, but with the magnitude of rotation scaled up. That way, he may slightly overshoot the object, but find it again while rotating past it.
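The overshoot trick can be sketched as a function of last_known_blob_center. The 1.5× overshoot_factor is a hypothetical value, the function name is hypothetical, and the angle uses the same linear fraction-of-fov_x approximation as earlier with fov_x assumed to be roughly 58 degrees.

```python
def search_turn_degrees(last_known_blob_center, width=32, fov_x=58.0,
                        overshoot_factor=1.5):
    """Horizontal turn (degrees, positive = right) toward where the blob was
    last seen, scaled up so Cozmo sweeps past it and can spot it again.

    Returns 0.0 if no blob has been seen yet.
    """
    if last_known_blob_center is None:
        return 0.0
    x, _ = last_known_blob_center
    offset = (x - (width - 1) / 2) / width * fov_x
    return offset * overshoot_factor


print(search_turn_degrees((31.5, 5)))  # 43.5
print(search_turn_degrees(None))       # 0.0
```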

Converting RGB to HSV

The last modification I made was processing color in HSV (hue, saturation, value) instead of RGB. The hue component specifies the color, the saturation specifies how vivid it is (low saturation means the color is washed out toward gray or white), and the value specifies the brightness.

When I calculated color distance in HSV space instead of RGB space, the tennis ball was interpreted as almost entirely yellow, whereas before only the brightest parts of the ball were seen as yellow. In other words, HSV can be very useful for identifying the same color under changing lighting conditions.
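Python’s standard colorsys module can do the conversion. The sketch below is one plausible way to measure HSV distance, not necessarily the example program’s: hue is treated as circular (0.99 and 0.01 are neighbors), and the reference colors are hypothetical. For the dim yellowish pixel in the usage line, the RGB approximate_color rule from earlier would answer “red,” because red is its largest channel.

```python
import colorsys


def to_hsv(rgb):
    """Convert 0-255 RGB to (h, s, v), each in [0, 1]."""
    r, g, b = (c / 255.0 for c in rgb)
    return colorsys.rgb_to_hsv(r, g, b)


def hsv_distance_sq(a, b):
    """Squared distance between two HSV colors, treating hue as circular."""
    dh = abs(a[0] - b[0])
    dh = min(dh, 1.0 - dh)  # hue wraps around the color wheel
    return dh ** 2 + (a[1] - b[1]) ** 2 + (a[2] - b[2]) ** 2


# Hypothetical reference colors, not the example program's values.
REFERENCE_HSV = {name: to_hsv(rgb) for name, rgb in {
    "red": (255, 0, 0), "yellow": (255, 255, 0), "green": (0, 255, 0),
    "blue": (0, 0, 255), "white": (255, 255, 255)}.items()}


def closest_hsv_color(rgb):
    """Name of the reference color nearest to rgb in HSV space."""
    hsv = to_hsv(rgb)
    return min(REFERENCE_HSV,
               key=lambda name: hsv_distance_sq(hsv, REFERENCE_HSV[name]))


print(closest_hsv_color((120, 110, 20)))  # yellow
```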

In Closing

An application like this is never finished. There are always refinements to be made, features to be added, and so on. Off the top of my head, I’d love to see someone take the code and have Cozmo white balance the image when the program starts, using the known color of a marker object. Another idea I’d love to see implemented is to evolve the program into a game of fetch, where Cozmo pushes the ball back to the place he started from.

You can get the color_finder.py example program, as well as all of the others, from our Downloads page. Give it a go, and let us know what you think in the comments below!

Great writeup and demo (I love the laugh at the end. It’s the “Cozmo Effect”.) I see another new video too!

p.s. Happy AnkiSDKForumCakeDay! (Still lots of science to be done.)

Wow, this is great! Tons of fun things to create with this…

Thank you so much for an amazing SDK application!

Great! I am planning to use fast-rcnn to recognize faces or something else instead. This demo provides a base framework for me. Thanks a lot.

Can you make Cozmo recognize the color of cube lights?